Reader Martin asks us for some help extracting embedded content from a submitted malicious document.

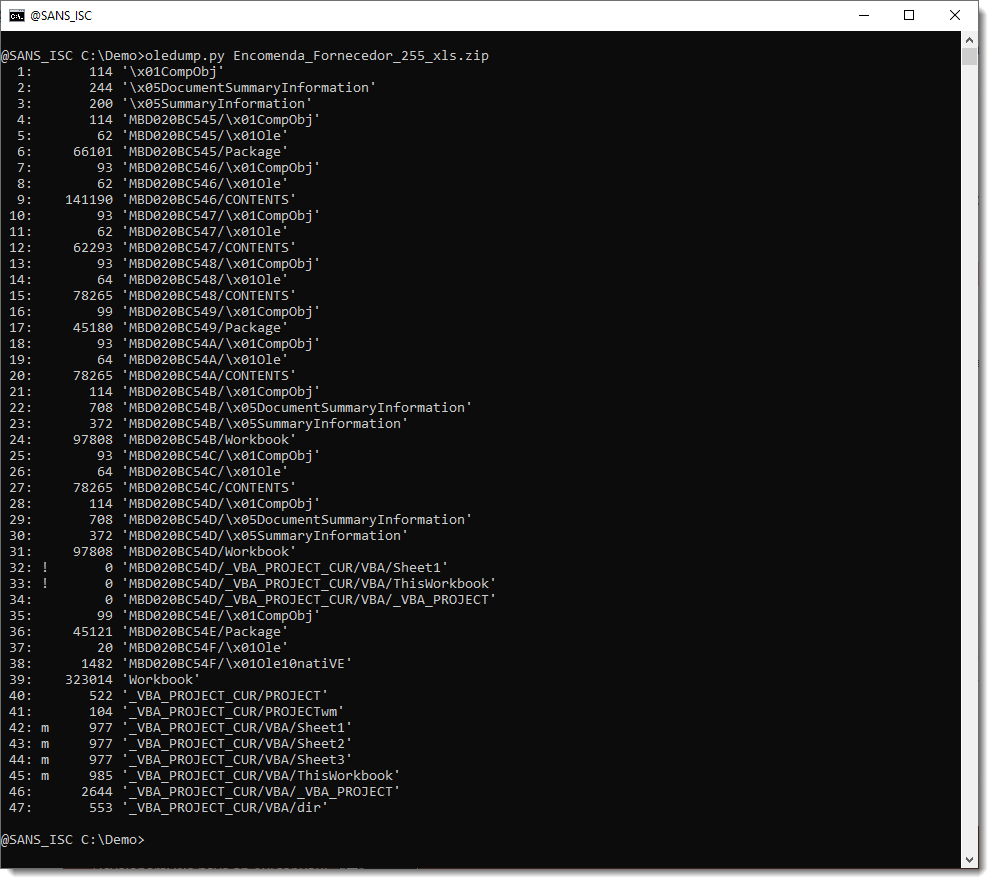

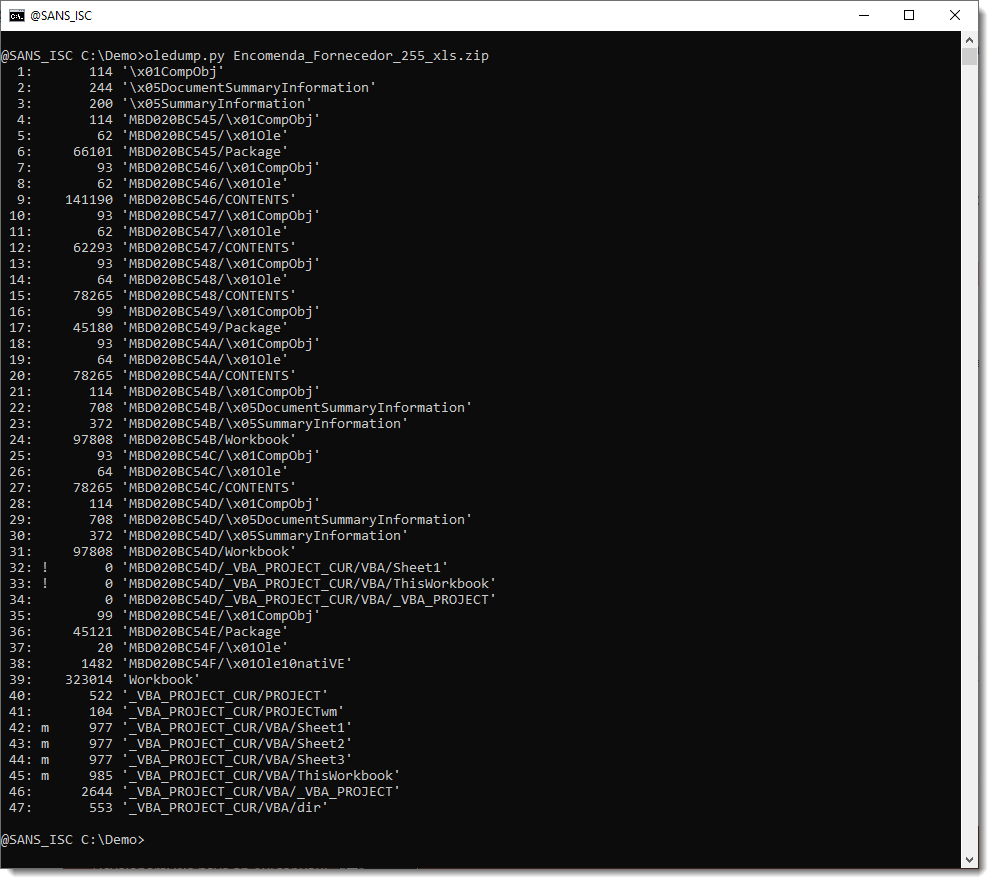

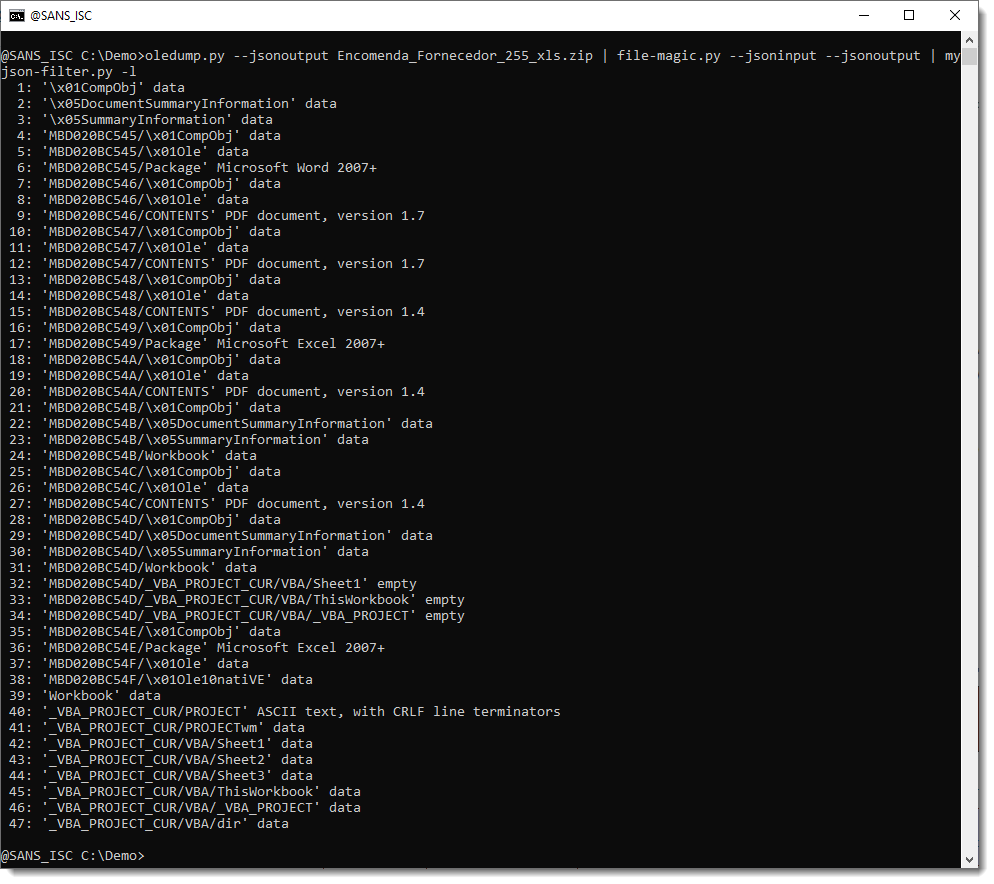

These are the streams inside the document, listed with oledump.py:

The streams to extract are those whose name includes Package, CONTENTS, and so on.

This can be done with oledump as follows: oledump.py -s 6 -d sample.vir > extracted.vir

-s 6 selects stream 6, and -d produces a binary dump which is written to a file via file redirection (>).

This has to be repeated for every stream that could be interesting.
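To give an idea of what that repetition looks like when scripted, here is a minimal Python sketch that runs the same oledump.py command once per stream. It only uses the -s and -d options shown above. The function names, the output filenames, and the assumption that oledump.py can be started directly from the PATH are mine, for illustration only.

```python
import subprocess


def dump_command(stream_id, sample="sample.vir"):
    """Build the oledump.py command line that dumps one stream in binary form."""
    return ["oledump.py", "-s", str(stream_id), "-d", sample]


def dump_streams(stream_ids, sample="sample.vir"):
    """Run oledump.py once per stream and save each dump to its own file.

    Assumption: oledump.py is executable and on the PATH. On some systems
    you may need to prefix the command with the Python interpreter.
    """
    written = []
    for stream_id in stream_ids:
        # Hypothetical naming scheme: one output file per stream number.
        output_name = f"extracted-{stream_id}.vir"
        with open(output_name, "wb") as output_file:
            subprocess.run(dump_command(stream_id, sample), stdout=output_file, check=True)
        written.append(output_name)
    return written
```

Calling dump_streams with the stream numbers taken from the oledump.py listing would then replace typing the command by hand for each stream.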

But I also have another method that involves fewer repeated commands.

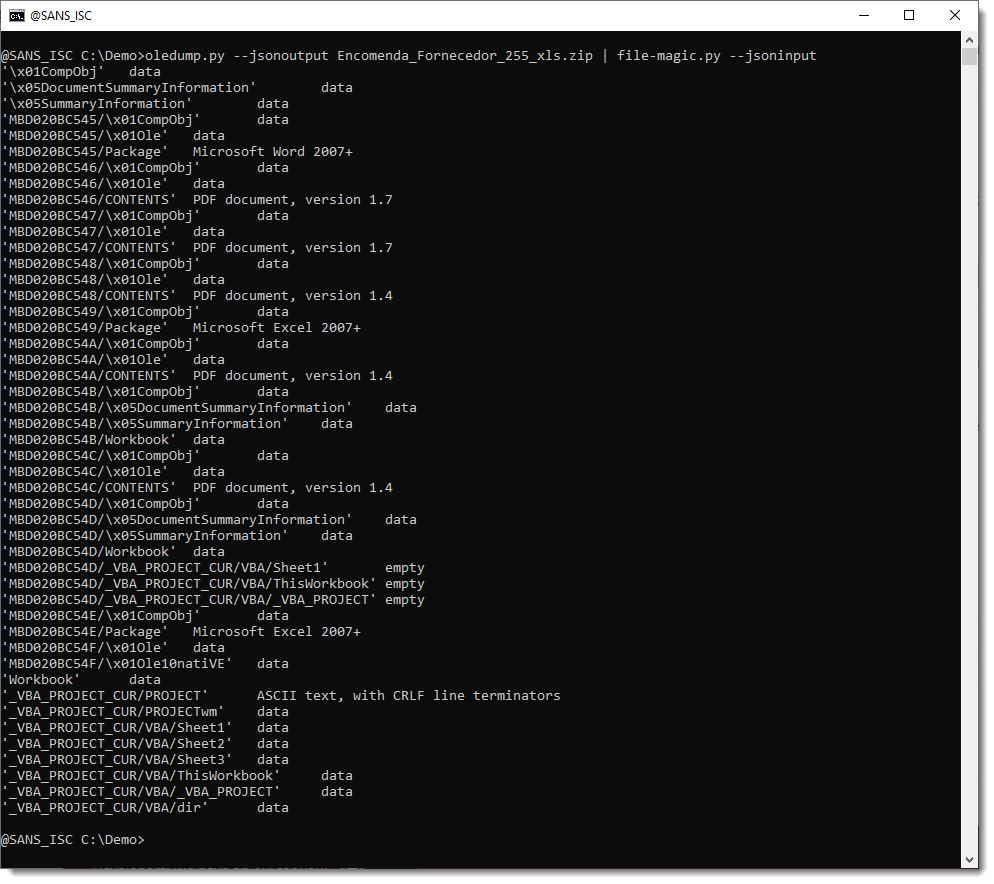

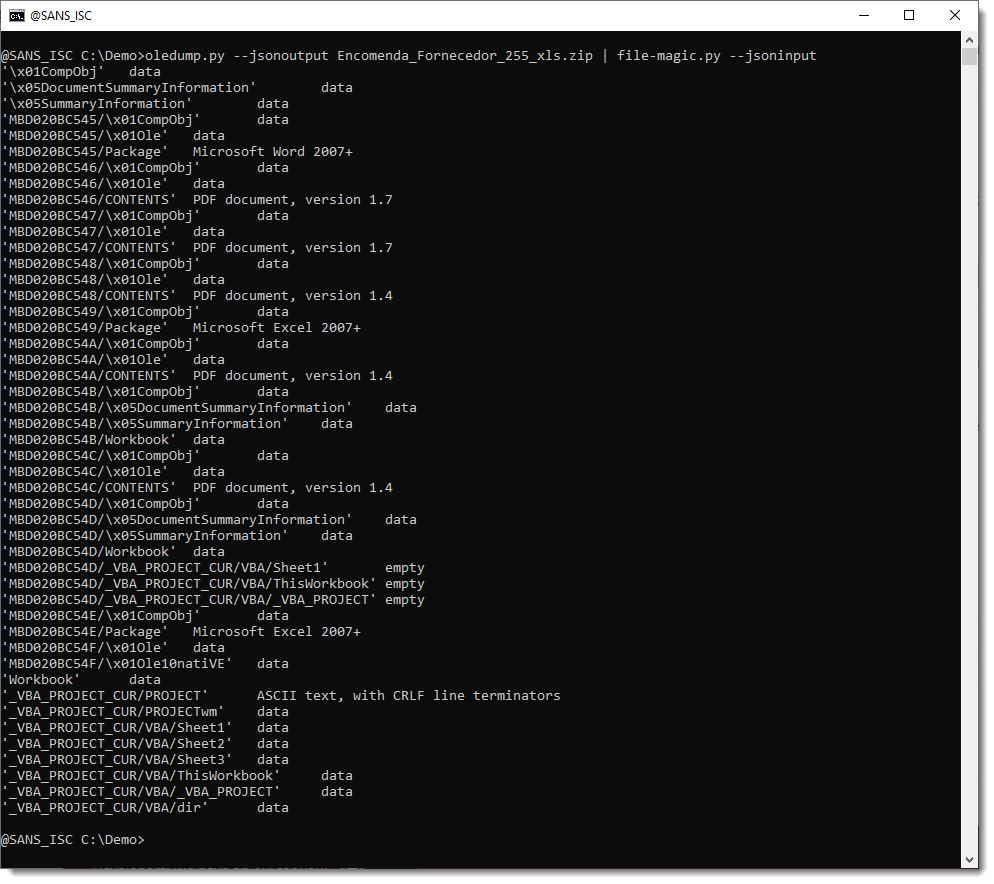

First, we let oledump.py analyze the file and produce JSON output. This JSON output contains all the streams (id, name and content) and can be consumed by other tools I make, like file-magic.py, a tool to identify files based on their content.

Like this:

file-magic.py identified the content of each stream: data, Word, PDF, ...
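If you want to consume this kind of JSON in your own script, the idea can be sketched as follows. The text above only says that each stream comes with an id, a name and its content. The top-level "items" key and the base64 encoding of the content are assumptions made for this sketch, so check the actual output of the tool before relying on them.

```python
import base64
import json


def load_items(json_text):
    """Return a list of (id, name, content bytes) tuples from the JSON document."""
    document = json.loads(json_text)
    items = []
    for item in document["items"]:  # assumed key name
        # Assumption: binary content is base64-encoded so it survives JSON.
        content = base64.b64decode(item["content"])
        items.append((item["id"], item["name"], content))
    return items


# Hypothetical sample document with one stream, built here for illustration.
sample = json.dumps({
    "items": [
        {
            "id": 1,
            "name": "Package",
            "content": base64.b64encode(b"%PDF-1.4").decode("ascii"),
        }
    ]
})

for item_id, name, content in load_items(sample):
    print(item_id, name, len(content))
```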

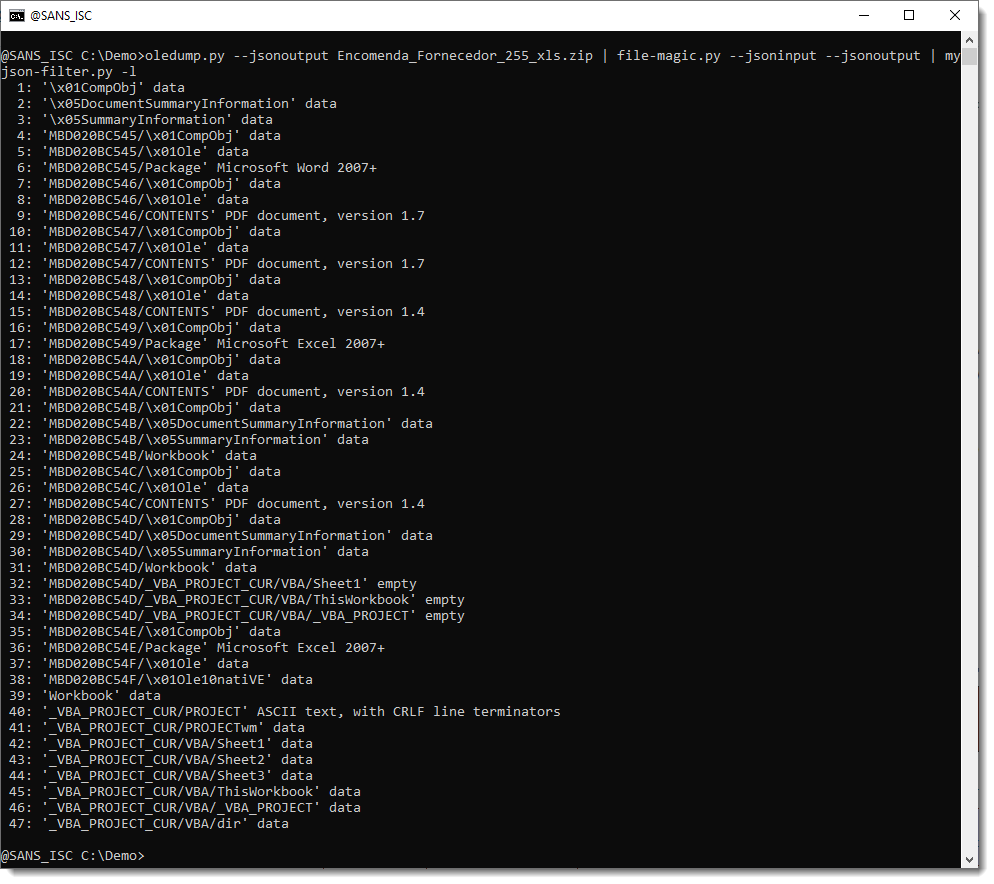

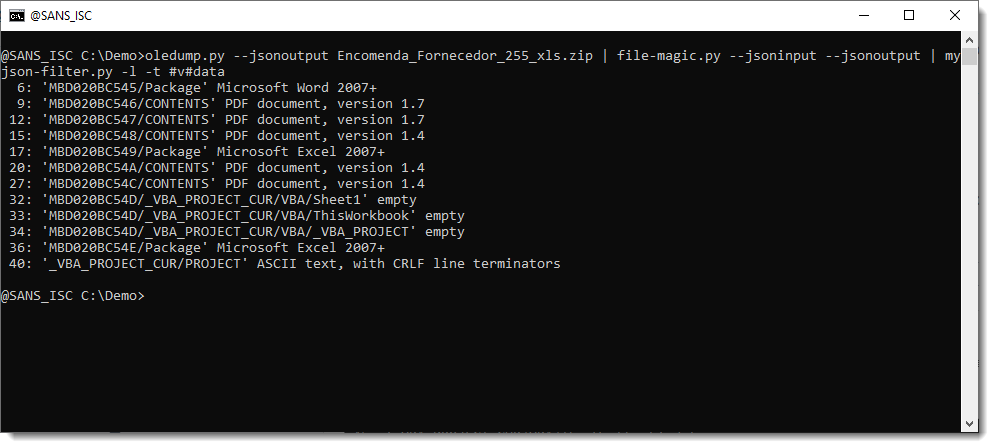

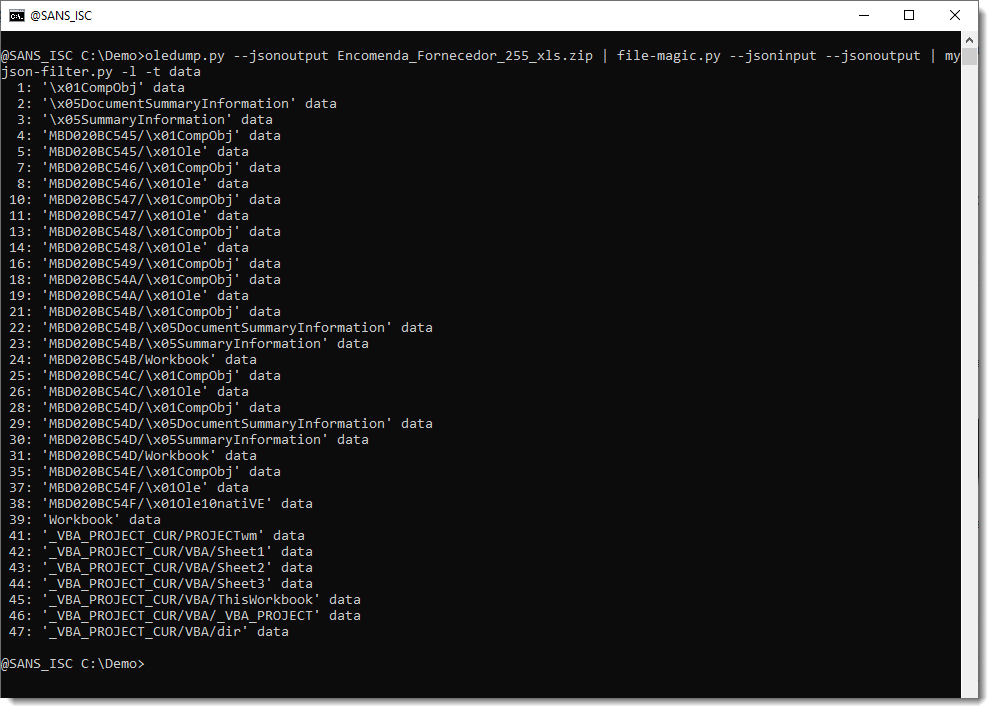

We can now let file-magic.py produce JSON output that can then be filtered by another tool, myjson-filter.py:

By default, myjson-filter.py produces JSON output (filtered), but with option -l (--list), we obtain a list of the items and can easily observe what the effect of our filtering is (for the moment, we have not yet filtered).

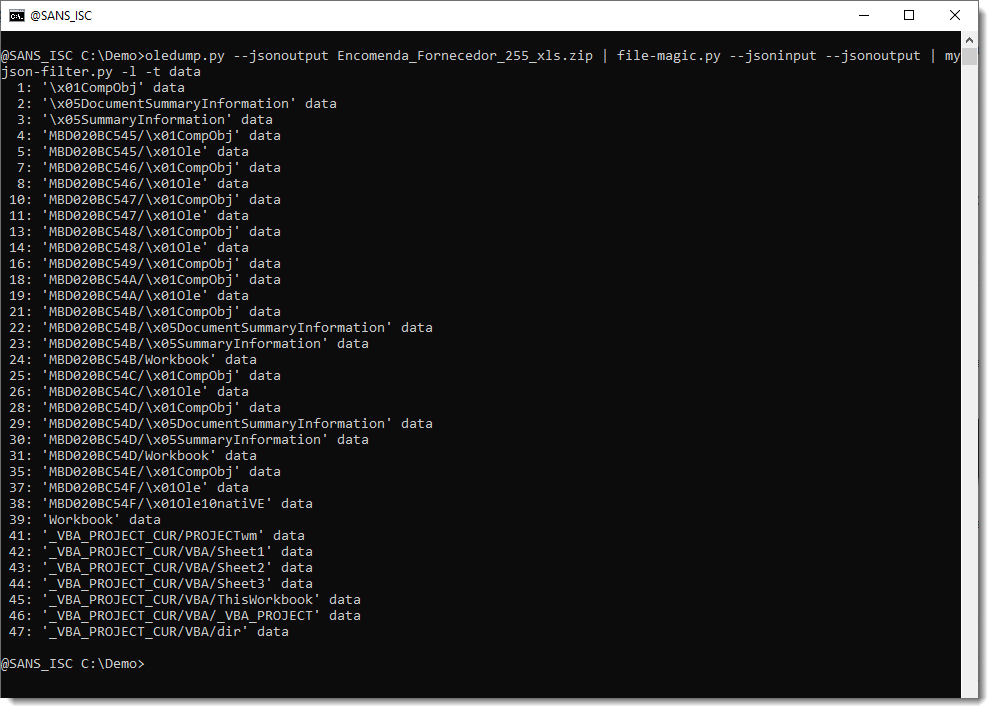

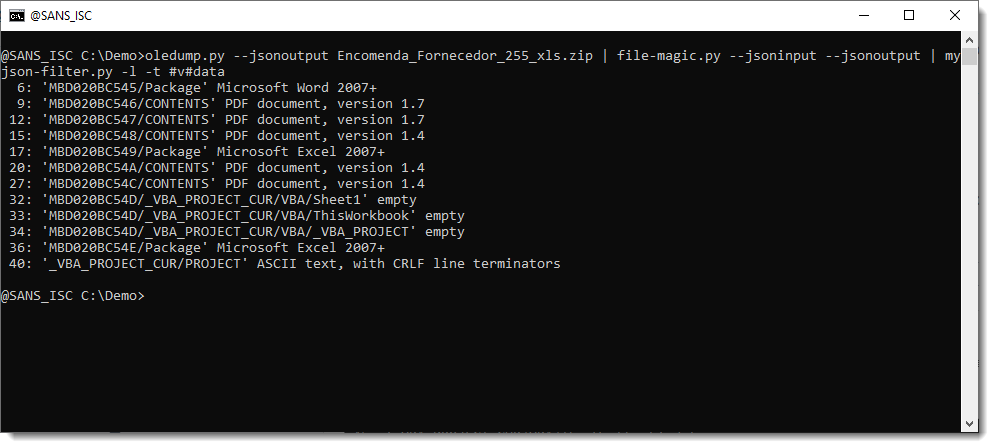

With option -t, we will filter by type (determined by file-magic.py). Option -t takes a regular expression that will be used to select types. Let's go with regular expression data:

At first, what is identified as just data doesn't interest us. So we will reverse the selection (v) to select everything that isn't data, like this:

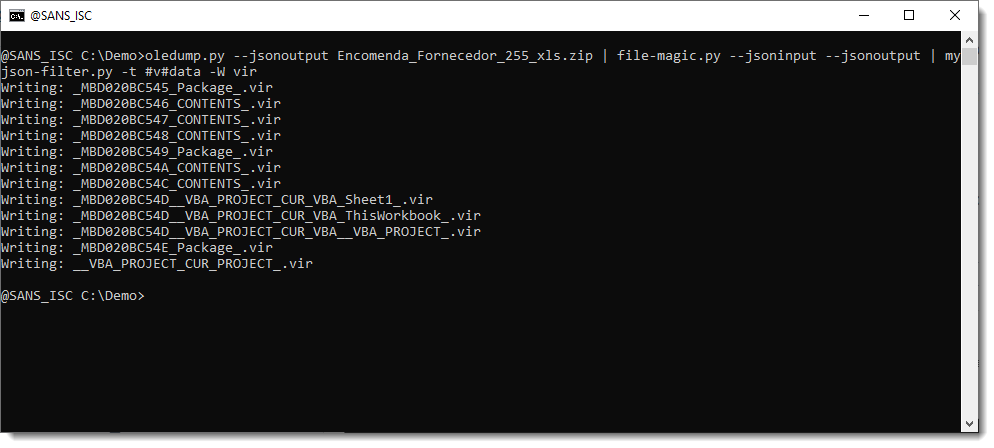

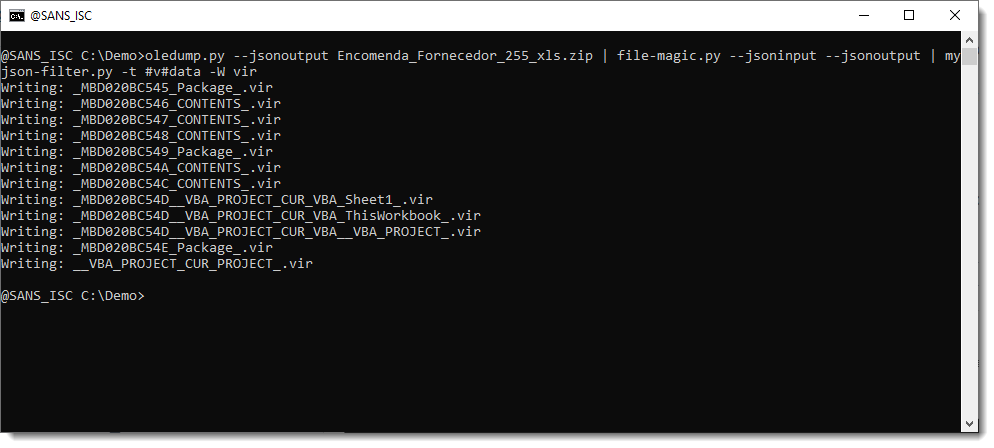
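The logic behind selecting by type and then reversing the selection can be sketched in a few lines of Python. This is an illustration of the idea, not the code of myjson-filter.py. The item layout and the type strings are hypothetical, and I anchor the regular expression (^data$) to match items that are just data, since a type description may contain the word data as part of a longer string.

```python
import re


def filter_by_type(items, pattern, invert=False):
    """Keep items whose type matches the pattern, or the others when invert is set."""
    regex = re.compile(pattern)
    selected = []
    for item in items:
        matched = regex.search(item["type"]) is not None
        # With invert=False we keep matches, with invert=True we keep non-matches.
        if matched != invert:
            selected.append(item)
    return selected


# Hypothetical items, as they might look after type identification.
items = [
    {"id": 1, "name": "Package", "type": "data"},
    {"id": 2, "name": "CONTENTS", "type": "PDF document"},
    {"id": 3, "name": "WordDocument", "type": "data"},
]

only_data = filter_by_type(items, r"^data$")
not_data = filter_by_type(items, r"^data$", invert=True)
```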

I just added a new option to my myjson-filter.py tool to easily write all selected items to disk as individual files: option -W (--write).

Option -W requires a value: vir, hash, hashvir or idvir. Value vir instructs my tool to create files with a filename that is the (cleaned) item name and with extension .vir. Like this:

So now we have written to disk all streams that were identified as something other than plain data.
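To make the naming scheme of value vir more concrete, here is a small Python sketch of writing items to disk under a cleaned name with extension .vir. The exact cleaning rule used by the tool is not described here, so the rule below (replace anything that is not a letter, digit, dot, underscore or hyphen by an underscore) is an assumption, as are the function names and the sample stream name.

```python
import re
import tempfile
from pathlib import Path


def clean_name(name):
    """Replace every character outside a conservative safe set with an underscore."""
    # Assumed cleaning rule, chosen for this sketch only.
    return re.sub(r"[^A-Za-z0-9._-]", "_", name)


def write_items(items, directory):
    """Write each (name, content) pair to directory as <cleaned name>.vir."""
    target = Path(directory)
    target.mkdir(parents=True, exist_ok=True)
    written = []
    for name, content in items:
        path = target / (clean_name(name) + ".vir")
        path.write_bytes(content)
        written.append(path)
    return written


# Demonstration in a temporary directory with a hypothetical stream name.
with tempfile.TemporaryDirectory() as directory:
    paths = write_items([("ObjectPool/Package", b"example")], directory)
    names = [path.name for path in paths]
    contents = [path.read_bytes() for path in paths]
```

Note that with this simple rule, two items whose names clean to the same string would overwrite each other. A name based on a hash or on the item id avoids that kind of collision.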

If you don't find what you are looking for in these files, just use -t data to write all data files to disk, and see if you can find it there.

For another example of my tools that support JSON, take a look at my blog post "Combining zipdump, file-magic And myjson-filter".

Didier Stevens

Senior handler

Microsoft MVP

blog.DidierStevens.com
